Backpropagation Neural Network

Creating and training a backpropagation neural network in Java.

In one of the early Artificial Intelligence courses I took, one of the largest projects was building our own backpropagation neural network. We could choose the implementation language, and I chose Java. The project was split into several smaller assignments that moved us step by step toward a robust network. The first assignment was to train a network to learn the XOR function. XOR is relatively simple, but it is not linearly separable, so a network needs at least one hidden layer to learn it. That made it a good first check that we could build a network and implement backpropagation correctly.

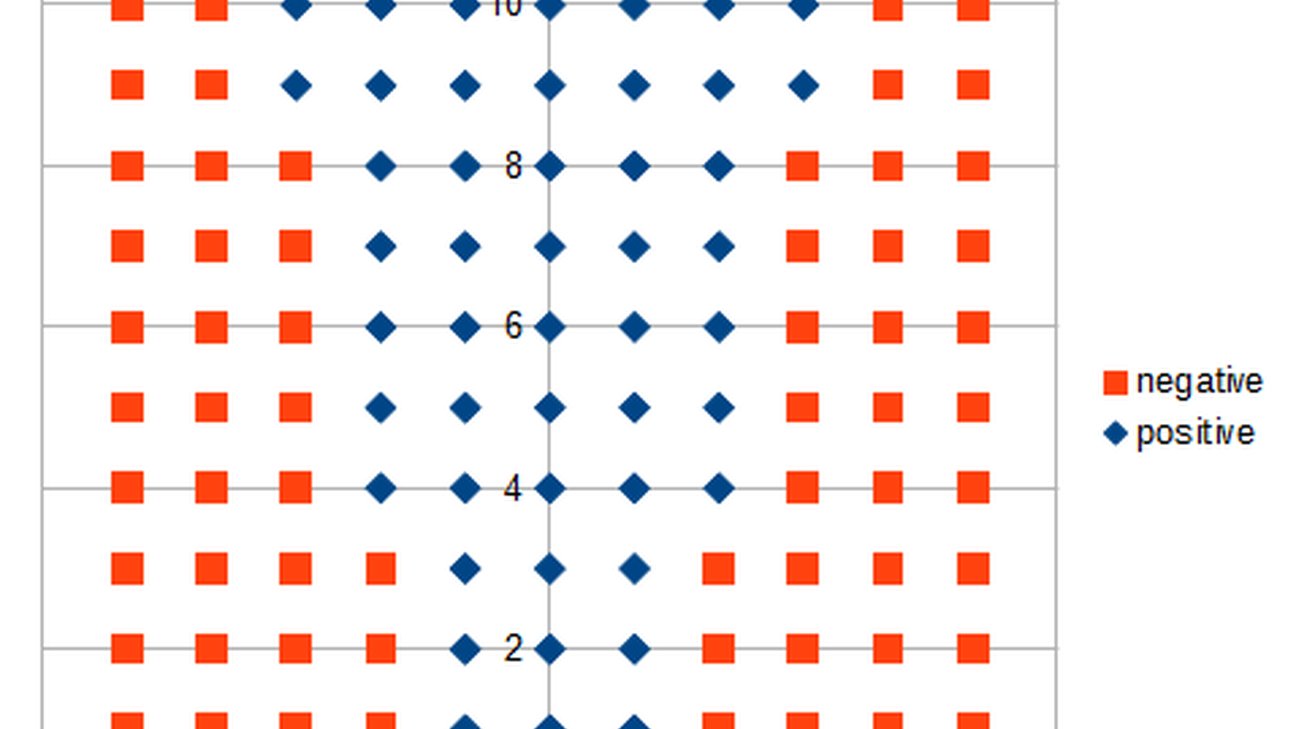
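
The original project code isn't reproduced here, but a minimal sketch of the same idea in Java might look like the following. It assumes a single hidden layer of sigmoid units, squared-error loss, and online (per-example) gradient descent; the class name `XorNetwork`, the hidden-layer size of 4, the learning rate, and the epoch count are all hypothetical choices, not the settings from the assignment.

```java
import java.util.Random;

/**
 * Sketch of a 2-input, one-hidden-layer, 1-output sigmoid network trained on XOR
 * with online backpropagation. The hidden size, learning rate, epoch count, and all
 * names are illustrative assumptions, not the original project's code.
 */
public class XorNetwork {
    public static final double[][] INPUTS = {{0, 0}, {0, 1}, {1, 0}, {1, 1}};
    public static final double[] TARGETS = {0, 1, 1, 0};

    private final int hidden;
    private final double[][] w1; // input-to-hidden weights, w1[j][i]
    private final double[] b1;   // hidden-unit biases
    private final double[] w2;   // hidden-to-output weights
    private double b2;           // output bias

    public XorNetwork(int hidden, long seed) {
        this.hidden = hidden;
        Random rng = new Random(seed);
        w1 = new double[hidden][2];
        b1 = new double[hidden];
        w2 = new double[hidden];
        // Random weights in [-1, 1) break the symmetry between hidden units.
        for (int j = 0; j < hidden; j++) {
            w1[j][0] = 2 * rng.nextDouble() - 1;
            w1[j][1] = 2 * rng.nextDouble() - 1;
            b1[j] = 2 * rng.nextDouble() - 1;
            w2[j] = 2 * rng.nextDouble() - 1;
        }
        b2 = 2 * rng.nextDouble() - 1;
    }

    public static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    /** Forward pass: stores hidden activations in h and returns the output activation. */
    private double forward(double[] x, double[] h) {
        double z = b2;
        for (int j = 0; j < hidden; j++) {
            h[j] = sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]);
            z += w2[j] * h[j];
        }
        return sigmoid(z);
    }

    public double predict(double[] x) {
        return forward(x, new double[hidden]);
    }

    /** One gradient-descent step on the squared error 0.5 * (y - t)^2 for one example. */
    private void trainStep(double[] x, double t, double lr) {
        double[] h = new double[hidden];
        double y = forward(x, h);
        double deltaOut = (y - t) * y * (1 - y); // dE/dz at the output unit
        for (int j = 0; j < hidden; j++) {
            // Back-propagate through w2[j] before w2[j] itself is updated.
            double deltaHidden = deltaOut * w2[j] * h[j] * (1 - h[j]);
            w2[j] -= lr * deltaOut * h[j];
            w1[j][0] -= lr * deltaHidden * x[0];
            w1[j][1] -= lr * deltaHidden * x[1];
            b1[j] -= lr * deltaHidden;
        }
        b2 -= lr * deltaOut;
    }

    /** How many of the four XOR patterns are classified correctly (threshold 0.5). */
    public int correctCount() {
        int correct = 0;
        for (int p = 0; p < INPUTS.length; p++) {
            int predicted = predict(INPUTS[p]) > 0.5 ? 1 : 0;
            if (predicted == (int) TARGETS[p]) correct++;
        }
        return correct;
    }

    /** Builds a network from the given seed and trains it for `epochs` passes over XOR. */
    public static XorNetwork train(int hidden, long seed, int epochs, double lr) {
        XorNetwork net = new XorNetwork(hidden, seed);
        for (int e = 0; e < epochs; e++) {
            for (int p = 0; p < INPUTS.length; p++) {
                net.trainStep(INPUTS[p], TARGETS[p], lr);
            }
        }
        return net;
    }

    public static void main(String[] args) {
        XorNetwork net = train(4, 42L, 20000, 0.5);
        for (double[] x : INPUTS) {
            System.out.printf("%.0f XOR %.0f -> %.3f%n", x[0], x[1], net.predict(x));
        }
        System.out.println("correct: " + net.correctCount() + "/4");
    }
}
```

Because training starts from random weights, a small network can occasionally settle into a local minimum and misclassify a pattern; retrying from a different seed often fixes this, which is the idea behind the random restarts mentioned in the second assignment.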

The second assignment was to train a network to learn the function y = x^2, framed as a classification problem: given a point, decide whether it lies above or below the curve y = x^2. This is similar to learning XOR but requires much more tuning to get right. We were not given specific guidelines for the network's parameters, such as the number of hidden layers or the number of perceptrons per hidden layer. This assignment therefore involved tuning those parameters, implementing techniques like random restarts, and enlarging the network until it converged on the training data, a set of 100 points. Full convergence was not strictly required; the goal was to come close to classifying the entire training set correctly.

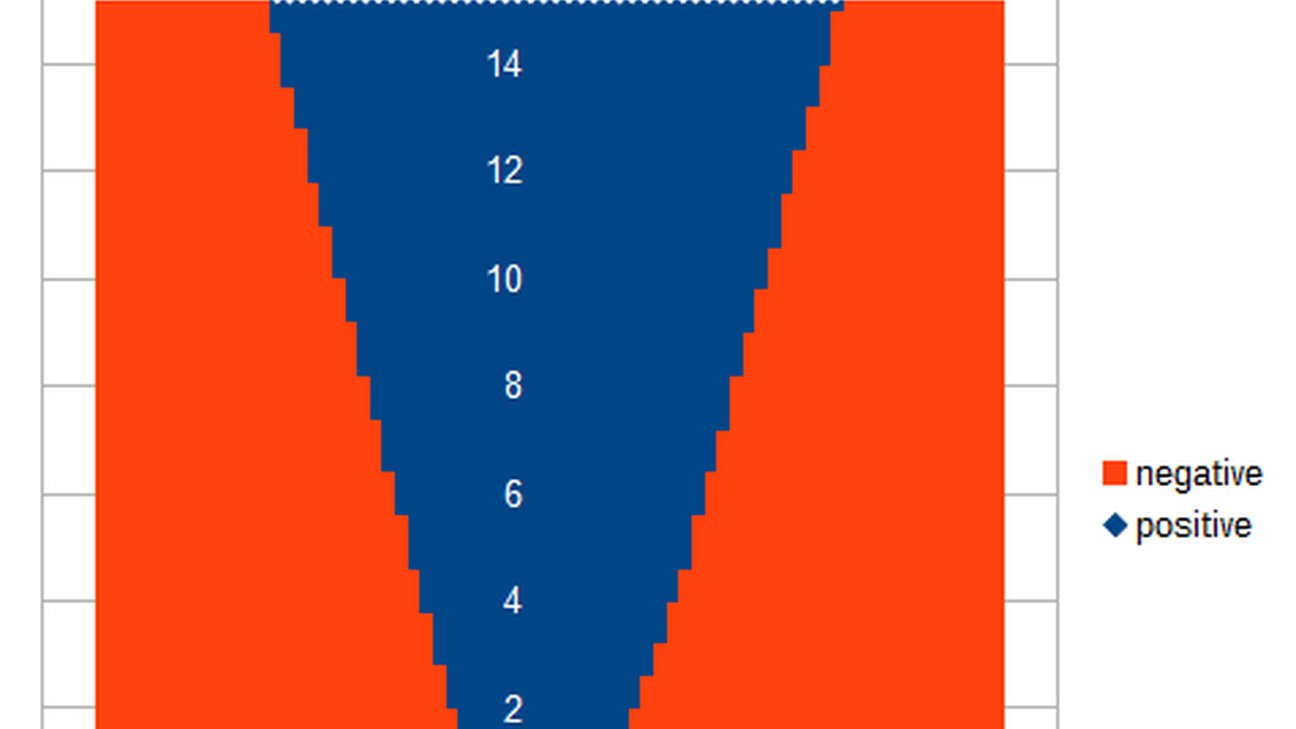
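
The labeling step and random restarts can be sketched in Java as follows, as a hypothetical illustration rather than the code from the project. It assumes training points are sampled uniformly with x in [-1, 1) and y in [0, 1) (the assignment's actual ranges aren't stated), treats "above the parabola" as label 1, and implements random restarts as training several networks from fresh random weights and keeping whichever classifies the most training points correctly. `ParabolaRestarts`, `label`, `samplePoints`, `Net`, and `bestOfRestarts` are made-up names.

```java
import java.util.Random;

/**
 * Sketch: label random points as above (1) or below (0) the parabola y = x^2, then
 * train several randomly initialised sigmoid networks ("random restarts") and keep the
 * most accurate one. Ranges, sizes, rates, and all names are illustrative assumptions.
 */
public class ParabolaRestarts {
    /** Target label: 1 if (x, y) lies above y = x^2, otherwise 0. */
    public static int label(double x, double y) {
        return y > x * x ? 1 : 0;
    }

    /** Hypothetical training set: n points with x in [-1, 1) and y in [0, 1). */
    public static double[][] samplePoints(int n, Random rng) {
        double[][] pts = new double[n][2];
        for (double[] p : pts) {
            p[0] = 2 * rng.nextDouble() - 1;
            p[1] = rng.nextDouble();
        }
        return pts;
    }

    /** One-hidden-layer sigmoid network: 2 inputs, `hidden` units, 1 output. */
    public static final class Net {
        private final double[][] w1;
        private final double[] b1, w2;
        private double b2;

        Net(int hidden, Random rng) {
            w1 = new double[hidden][2];
            b1 = new double[hidden];
            w2 = new double[hidden];
            for (int j = 0; j < hidden; j++) {
                w1[j][0] = 2 * rng.nextDouble() - 1;
                w1[j][1] = 2 * rng.nextDouble() - 1;
                b1[j] = 2 * rng.nextDouble() - 1;
                w2[j] = 2 * rng.nextDouble() - 1;
            }
            b2 = 2 * rng.nextDouble() - 1;
        }

        private static double sig(double z) { return 1 / (1 + Math.exp(-z)); }

        private double forward(double[] x, double[] h) {
            double z = b2;
            for (int j = 0; j < w2.length; j++) {
                h[j] = sig(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]);
                z += w2[j] * h[j];
            }
            return sig(z);
        }

        /** Online backpropagation step on squared error for a single example. */
        void step(double[] x, double t, double lr) {
            double[] h = new double[w2.length];
            double y = forward(x, h);
            double dOut = (y - t) * y * (1 - y);
            for (int j = 0; j < w2.length; j++) {
                double dHid = dOut * w2[j] * h[j] * (1 - h[j]); // uses w2[j] before its update
                w2[j] -= lr * dOut * h[j];
                w1[j][0] -= lr * dHid * x[0];
                w1[j][1] -= lr * dHid * x[1];
                b1[j] -= lr * dHid;
            }
            b2 -= lr * dOut;
        }

        /** Fraction of points whose predicted side of the parabola matches label(). */
        public double accuracy(double[][] pts) {
            int ok = 0;
            double[] h = new double[w2.length];
            for (double[] p : pts) {
                if ((forward(p, h) > 0.5 ? 1 : 0) == label(p[0], p[1])) ok++;
            }
            return (double) ok / pts.length;
        }
    }

    /** Trains up to `restarts` fresh networks and returns the most accurate one. */
    public static Net bestOfRestarts(double[][] train, int restarts, int hidden,
                                     int epochs, double lr, long seed) {
        Random rng = new Random(seed);
        Net best = null;
        double bestAcc = -1;
        for (int r = 0; r < restarts && bestAcc < 1.0; r++) {
            Net net = new Net(hidden, rng); // a new random initialisation per restart
            for (int e = 0; e < epochs; e++) {
                for (double[] p : train) net.step(p, label(p[0], p[1]), lr);
            }
            double acc = net.accuracy(train);
            if (acc > bestAcc) { best = net; bestAcc = acc; }
        }
        return best;
    }
}
```

For example, `bestOfRestarts(samplePoints(100, new Random(1)), 5, 8, 2000, 0.5, 7L)` trains up to five 8-unit networks on 100 sampled points and stops early once one of them classifies every training point correctly. Increasing `hidden` is the code-level counterpart of expanding the size of the network.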

My network classified all 100 training points correctly, determining whether each one lay above or below the parabola y = x^2. The cool part of the project was then running our networks on 1000 other points that were not in the training set and seeing how well they held up on this testing data. The results looked reasonably close to a parabola, although some of the finer detail near the vertex at (0, 0) was lost (as might be expected, since that is where the curve bends most sharply).
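
Evaluating on unseen points amounts to sampling fresh points, comparing the classifier's answer with the true side of the parabola, and counting matches. The Java sketch below also reports accuracy separately for points near the vertex, which is one way to put a number on the detail lost around (0, 0). It assumes test points are drawn uniformly with x in [-1, 1) and y in [0, 1), and uses an arbitrary radius of 0.3 to define "near the origin". The classifier is passed in as a `java.util.function.BiPredicate`, so a trained network's prediction method could be plugged in; `ParabolaEvaluation`, `above`, and `evaluate` are hypothetical names.

```java
import java.util.Random;
import java.util.function.BiPredicate;

/**
 * Sketch: measure how a classifier generalises to fresh points, both overall and
 * near the vertex (0, 0). Sampling ranges, the radius, and all names are
 * illustrative assumptions.
 */
public class ParabolaEvaluation {
    /** Ground truth: true if (x, y) lies above y = x^2. */
    public static boolean above(double x, double y) {
        return y > x * x;
    }

    /**
     * Samples n test points with x in [-1, 1) and y in [0, 1) and returns
     * {overall accuracy, accuracy within `radius` of the origin, number of such points}.
     * Near-origin accuracy is NaN if no sampled point falls inside the radius.
     */
    public static double[] evaluate(BiPredicate<Double, Double> classifier,
                                    int n, double radius, long seed) {
        Random rng = new Random(seed);
        int correct = 0, near = 0, nearCorrect = 0;
        for (int i = 0; i < n; i++) {
            double x = 2 * rng.nextDouble() - 1;
            double y = rng.nextDouble();
            boolean ok = classifier.test(x, y) == above(x, y);
            if (ok) correct++;
            if (Math.hypot(x, y) < radius) { // point lies close to the vertex
                near++;
                if (ok) nearCorrect++;
            }
        }
        double nearAccuracy = near == 0 ? Double.NaN : (double) nearCorrect / near;
        return new double[] {(double) correct / n, nearAccuracy, near};
    }

    public static void main(String[] args) {
        // Stand-in classifier (a horizontal line at y = 0.25); a trained network's
        // prediction method would be plugged in here instead.
        BiPredicate<Double, Double> line = (x, y) -> y > 0.25;
        double[] r = evaluate(line, 1000, 0.3, 3L);
        System.out.printf("overall %.3f, near origin %.3f (%d points)%n", r[0], r[1], (int) r[2]);
    }
}
```

Comparing the overall and near-origin figures for a trained network shows whether its errors concentrate around the vertex, as they appeared to in the plotted results.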