A Face Detection Based Unity Game

Building a game controlled by only your face.

Can facial detection be used as an effective input device?

This was the question I wanted to explore when I started this research project for my course in Immersive Virtual Environments. While choosing a term project for the course, I had difficulty deciding whether to do a game development project or an image processing project. My indecision eventually led me to combine both ideas into a game driven by image processing, which would use the movement of a player's face as an input device. Using a webcam as an input device interested me because it is a very constrained one. Webcam video is often low quality and low frame rate, so converting images into input in real time seemed like a challenging but worthwhile area to explore. Cameras have become a progressively more important input device with the advent of machine learning. Many manufacturing systems that, in the past, utilized complicated sensor arrays have moved to image processing instead, because it is a cheaper and more versatile solution. For games in particular, the idea is worth exploring because nearly all mobile devices have a camera of some type, and the demand for high-quality pictures and video means that the input device is constantly being improved and upgraded.

My Research

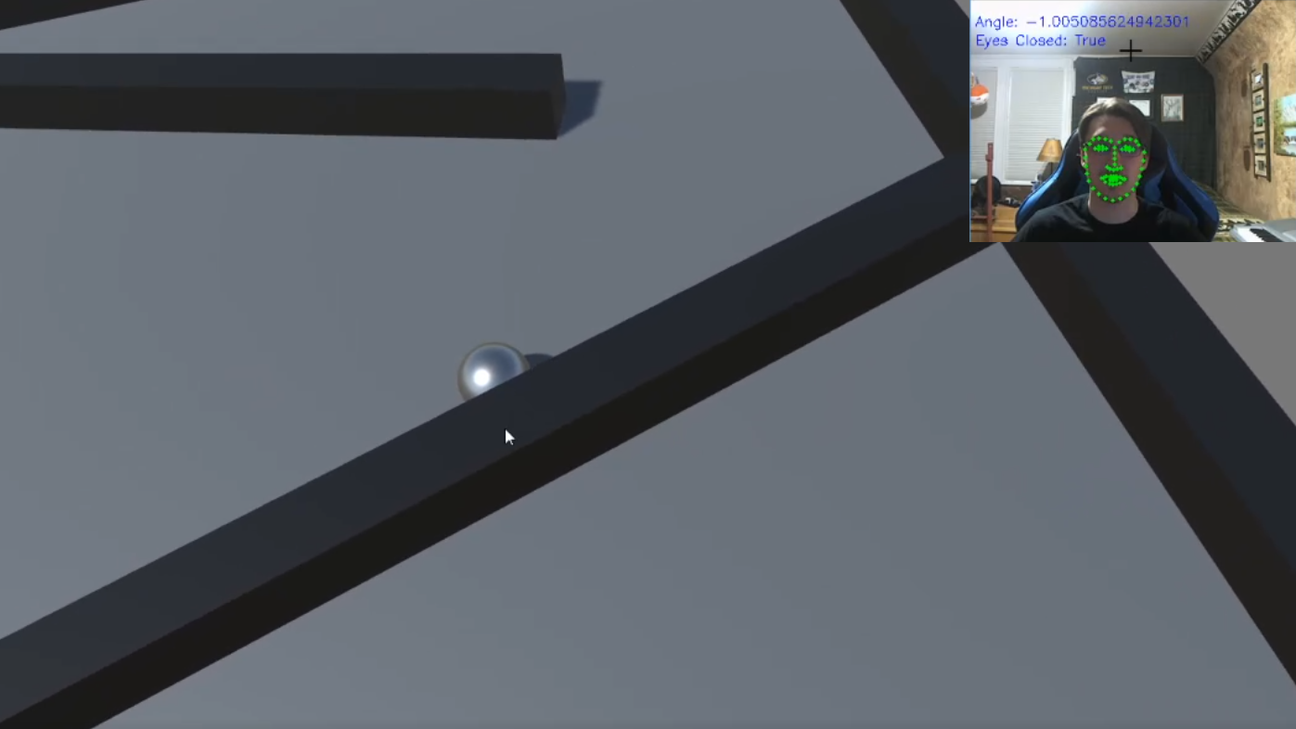

I accomplished a lot more with my research project than I had initially expected. I decided to use the Unity engine to build the game, and after looking at candidates for facial detection I settled on a combination of OpenCV and dlib. OpenCV is a library that offers a lot of basic image processing and manipulation functionality, and dlib is a machine learning library with some built-in models for face detection. Using these two libraries in Python, I started by writing an image processing pipeline that let me control my mouse cursor by moving my face. Separately, I developed a simple physics-based "platforming" game with a ball as the main character. One of the key challenges in the project was connecting these two isolated pieces, which I accomplished by writing a server and client to communicate between them. The image processing pipeline constantly sends data to the game client, and each frame the game polls the input from the camera. I implemented some smoothing and filtering techniques on top of this to remove much of the noise inherent in facial detection. The end product turned out to be a very basic game that I could control by tilting my head, and with some very rudimentary detection on the eyes I could somewhat accurately detect when I blinked, which acted as a button press. These results seemed very promising to me, and I could see applications in games made specifically for people with physical disabilities that make traditional controls problematic.
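
To make the smoothing and blink detection ideas more concrete, here is a minimal sketch of what that kind of filtering could look like. It is not the project's actual code. It assumes an exponential moving average for smoothing, a small dead zone to ignore tiny head movements, and the commonly used eye aspect ratio heuristic for blinks, with six landmark points per eye ordered the way 68-point face landmark models typically order them. All function names, the `alpha` value, and the thresholds are hypothetical and would need tuning against a real camera.

```python
import math


class ExponentialSmoother:
    """Exponential moving average (an assumed technique, not necessarily the one used)."""

    def __init__(self, alpha=0.3):
        self.alpha = alpha  # hypothetical value: weight given to the newest sample
        self.value = None

    def update(self, sample):
        # The first sample initializes the filter; later samples are blended in.
        if self.value is None:
            self.value = sample
        else:
            self.value = self.alpha * sample + (1 - self.alpha) * self.value
        return self.value


def apply_dead_zone(value, threshold=2.0):
    """Return 0.0 for small values so slight head jitter does not move the ball."""
    return 0.0 if abs(value) < threshold else value


def head_tilt_degrees(left_eye, right_eye):
    """Angle of the line between two eye centers, in degrees (0 means level)."""
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def eye_aspect_ratio(eye):
    """Ratio of eye height to eye width from six (x, y) landmarks.

    Assumed order: corner, two upper-lid points, opposite corner,
    two lower-lid points.
    """
    vertical = math.dist(eye[1], eye[5]) + math.dist(eye[2], eye[4])
    horizontal = math.dist(eye[0], eye[3])
    return vertical / (2.0 * horizontal)


def is_blinking(eye, threshold=0.2):
    """Treat the eye as closed when the ratio drops below a hypothetical threshold."""
    return eye_aspect_ratio(eye) < threshold


# Example: smooth a noisy tilt signal before handing it to the game.
smoother = ExponentialSmoother(alpha=0.5)
for raw_tilt in (10.0, 20.0, 0.5):
    game_input = apply_dead_zone(smoother.update(raw_tilt))
```

A lower `alpha` gives a steadier signal at the cost of more lag between moving your head and seeing the ball respond, so the value is a trade-off between noise and responsiveness.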