Generating Text with RNNs

Using deep learning techniques to generate text.

What is a Recurrent Neural Network?

A common way of describing Artificial Neural Networks (ANNs) is as function approximators. An ANN takes a set of inputs, called a feature vector, and produces an output based on a set of learned parameters. These parameters are learned during training, using a dataset of feature vectors paired with their known desired outputs. The more effectively the network learns its parameters during training, the better it approximates the correct outputs for feature vectors outside the training data.
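To make the function-approximator idea concrete, here is a minimal sketch: a single linear "neuron", the simplest possible network, trained by gradient descent on (feature, desired output) pairs. The toy data (generated from y = 2x + 1), the learning rate, and the epoch count are illustrative assumptions. Real ANNs stack many such units with nonlinear activations, but training follows the same idea of adjusting parameters to reduce error on known examples.

```python
# A single linear unit y_hat = w * x + b, trained with gradient descent
# to minimize mean squared error on known (input, desired output) pairs.

def train_linear_neuron(data, lr=0.05, epochs=2000):
    """Learn parameters w and b from a list of (x, y) training pairs."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            error = (w * x + b) - y          # prediction minus desired output
            grad_w += 2 * error * x / n      # d(MSE)/dw
            grad_b += 2 * error / n          # d(MSE)/db
        w -= lr * grad_w                     # step against the gradient
        b -= lr * grad_b
    return w, b

# Hypothetical toy training set drawn from y = 2x + 1.
training_data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0]]
w, b = train_linear_neuron(training_data)

# Apply the learned parameters to an input outside the training data.
prediction = w * 10.0 + b
print(round(w, 3), round(b, 3), round(prediction, 2))
```

Because the learned parameters recover the underlying rule, the prediction for x = 10 is close to 21 even though that input never appeared in training.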

A Recurrent Neural Network (RNN) is a variant of ANN designed for inputs that have a sequential or temporal relationship. RNNs are very similar to ANNs, but the network expects its inputs in a particular order, and the network's state after each input is carried forward to modify its behavior for the next input in the sequence. A subset of an RNN's parameters is dedicated to capturing the relationships between the inputs in a sequence, effectively giving the network a form of memory. With an ANN, you might take sensor readings at one instant in time and use them to predict the state of the system at that instant. With an RNN, you can take this idea further: readings you have seen in the past inform how you interpret the current readings, and the combined effect of past and current readings is used to predict the state of the system.

This idea of a memory, or history of state, is an example of a common pattern in neural network design: identify local structure in a problem and exploit it to reduce both the noise and the number of parameters needed to learn the problem well (the more structure your implementation captures, the less your network has to learn). Because of this, RNNs are well suited to applications that involve sequential or time series inputs.
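The "memory" described above can be sketched in plain Python, assuming the classic Elman formulation, where a hidden state is updated as h_t = tanh(W_x x_t + W_h h_{t-1} + b). The weights below are hypothetical, hand-picked values rather than trained ones. W_h plays the role of the parameters that capture relationships between inputs, and the same weights are reused at every step, which is one way an RNN keeps its parameter count small.

```python
import math

def rnn_step(x, h_prev, W_x, W_h, b):
    """One Elman RNN step: h_t = tanh(W_x @ x_t + W_h @ h_{t-1} + b)."""
    h_new = []
    for i in range(len(b)):
        total = b[i]
        total += sum(W_x[i][j] * x[j] for j in range(len(x)))            # current input
        total += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))  # carried-over memory
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(sequence, W_x, W_h, b):
    """Feed a sequence through the cell in order, carrying the hidden state forward."""
    h = [0.0] * len(b)  # the memory starts out empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return states

# Hypothetical parameters: 1 input feature, 2 hidden units.
W_x = [[0.5], [-0.3]]
W_h = [[0.8, 0.1], [0.2, 0.7]]
b = [0.0, 0.1]

# Both sequences end with the same reading (2.0), but they have different histories.
states_a = run_rnn([[1.0], [0.0], [2.0]], W_x, W_h, b)
states_c = run_rnn([[0.0], [1.0], [2.0]], W_x, W_h, b)
print(states_a[-1])
print(states_c[-1])
```

The final states differ even though the last input is identical, because the hidden state still reflects the earlier readings. A plain ANN given only the last reading would produce the same output in both cases.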

Turning an RNN Predictor into a Generator

RNNs do a surprisingly good job of taking a sequence and making a prediction from it. How does prediction relate to generation, though? The key is to formulate the RNN predictor recursively, so that the output of the RNN can be fed back into it as part of its input sequence. If you can formulate and train an RNN predictor in this way, converting it into a sequence generator is straightforward. First, you need a seed sequence to kickstart the generation process; sometimes this is a sequence of particular interest, but it could also be a null value or a special sequence-start character. Feed the seed sequence into your RNN predictor to predict an output. Then update the input sequence to include the predicted output, for example by appending the prediction (and, if the model takes a fixed-length window, dropping the oldest element), and run a prediction on the new sequence to produce another output. Repeating this process generates sequences of arbitrary length, turning your predictor into a generator.
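The seed-predict-append loop above can be sketched as follows. To keep the example self-contained, a trained RNN is replaced by a hypothetical stand-in predictor (`build_toy_predictor`) that simply returns the character most often seen after the last input character in a tiny corpus; the `generate` function only assumes it is given some `predict_next(sequence)` callable, so a real model could be dropped in its place. The names, the corpus, and the window size are illustrative assumptions.

```python
from collections import Counter, defaultdict

def build_toy_predictor(corpus):
    """Hypothetical stand-in for a trained RNN predictor.

    Returns the character most frequently observed after the last character
    of the input sequence (ties broken alphabetically). A real RNN would
    condition on the whole input window, not just its last element.
    """
    followers = defaultdict(Counter)
    for current, nxt in zip(corpus, corpus[1:]):
        followers[current][nxt] += 1

    def predict_next(sequence):
        counts = followers.get(sequence[-1])
        if not counts:
            return corpus[0]  # fallback for a character never seen in training
        return min(counts, key=lambda ch: (-counts[ch], ch))

    return predict_next

def generate(predict_next, seed, num_steps, window=None):
    """Turn a next-step predictor into a generator by feeding outputs back in.

    `seed` must be non-empty in this sketch (e.g. a start character).
    """
    generated = list(seed)
    for _ in range(num_steps):
        # Use the most recent `window` items as input, or everything so far.
        context = generated if window is None else generated[-window:]
        generated.append(predict_next(context))  # append the prediction to the input
    return "".join(generated)

predict = build_toy_predictor("abcabcabc")
result = generate(predict, seed="a", num_steps=5, window=3)
print(result)
```

Greedy selection is used here only to keep the output deterministic. Text generators commonly sample the next character from the model's predicted probability distribution instead, often scaled by a temperature parameter, which avoids getting stuck repeating the same short loop.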

Applications of Text Generation

Text generation, and sequence generation more generally, is becoming increasingly important in language processing. Virtual assistant products such as Google Home and Amazon Echo use sophisticated RNN variants to solve a sequence translation problem: converting an audio waveform into an output sequence that contains a response to a query. In internet culture, there have been recent examples of generating speech that simulates the voice of a particular person, and of generating deepfake videos. For text specifically, applications include automatically generating essay text from topic sentences and generating entire stories from brief descriptions. Generation can also help with detection problems that require large text corpora, since generated text can be used to artificially inflate datasets with plausible data. Someday, programming may evolve into supplying a set of business requirements to a generative model and asking it to generate the necessary compilable source code.

My Research

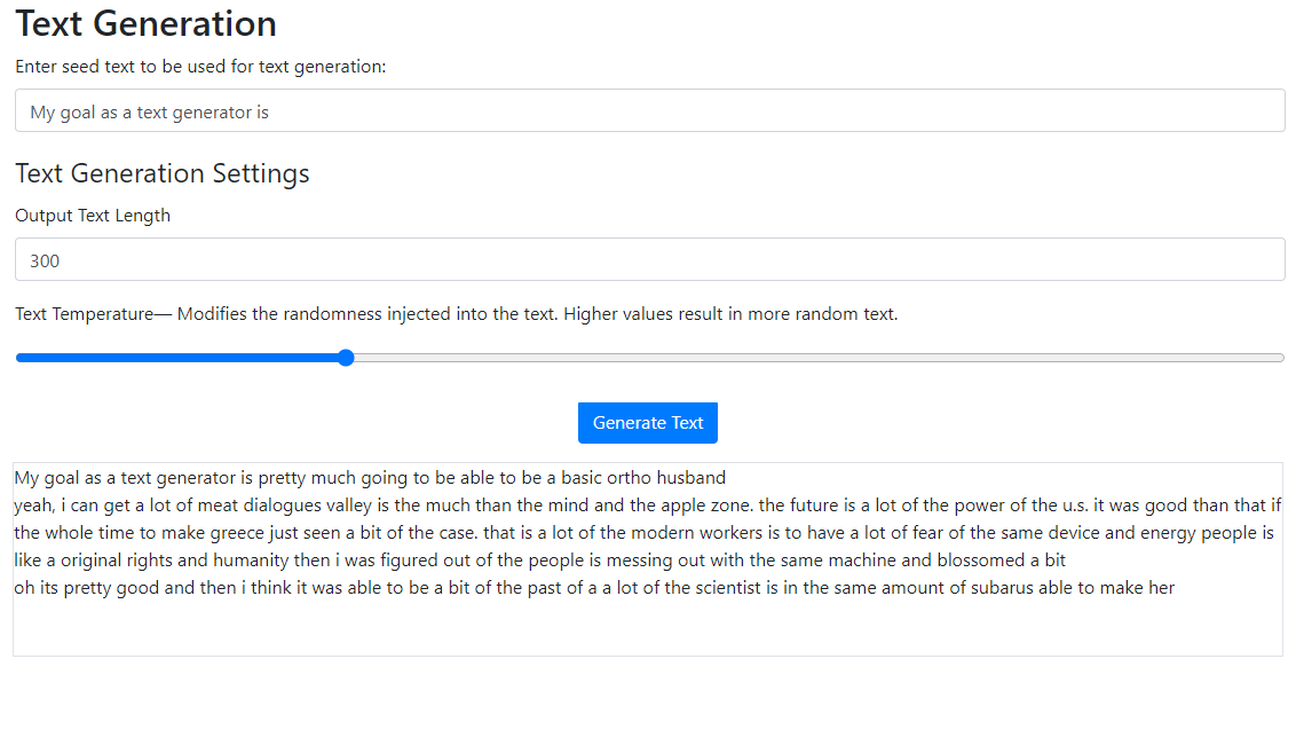

My research was more or less a survey of modern text generation techniques and an exploration of the literature. The specific project I had in mind was to build a text generator, trained on a corpus of Discord messages I had written, that produces text resembling my informal writing style. The goal was to create a Django frontend that accepts a seed text and generates text from it. Measured against these goals, the project was quite successful: I ended up with a webpage that could generate blocks of text of arbitrary length that vaguely approximated my writing style.

This project was a very good avenue for learning the TensorFlow framework for building machine learning models, and I got a lot of value from the numerous journal articles I surveyed, which introduced me to some of the cutting-edge techniques being applied to text generation.